If you’re considering a move to a new office, or finding that the cloud doesn’t meet your needs for cost, flexibility, or security, be prepared to establish a small data center at the edge. Local colocation is another excellent option to explore for distributed connectivity.

Insights on Building a Data Center

- Enable success within the environment: It’s crucial to create a reliable infrastructure that doesn’t place the burden of reliability solely on your IT staff.

- Prioritize safety and simplicity in design.

- Implement fault-tolerant measures.

- Ensure the design allows for reasonable scalability.

This comprehensive guide presents a recommended reference architecture for corporate data centers to ensure success.

Building vs Buying

Before delving into the key factors of constructing a successful data center, let’s first address the debate of building versus buying and what to avoid when establishing your facility. The decision of building versus buying ultimately depends on your requirements.

If you have specific needs that necessitate an on-premises solution, colocation alone may not fulfill them. Edge computing, for example, requires an on-site setup to function effectively. Another crucial consideration is scale.

At what point does it become more commercially viable to invest in building a data center rather than purchasing one? The threshold usually lies around 2-3 megawatts. Anything below this limit rarely justifies the cost of construction. This explains why you rarely come across facilities running below this capacity.

Therefore, we recommend building your own data center below the 2-3 megawatt range only if there is a strict on-premises requirement. Keep in mind that staying current with the latest developments in data center design takes time and effort for enterprise users.
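
As a rough illustration, the rule of thumb above can be written as a small decision helper. This is a minimal sketch, not a sizing method: the function name, the return labels, and the choice of 2 MW (the lower end of the quoted 2-3 MW range) as the cutoff are assumptions made for the example.

```python
# Assumption: the lower end of the "2-3 megawatt" range is used as the cutoff.
BUILD_THRESHOLD_MW = 2.0


def recommend(critical_load_mw: float, strict_on_prem: bool) -> str:
    """Return 'build' or 'colocate' following the rule of thumb in the text."""
    if critical_load_mw >= BUILD_THRESHOLD_MW:
        # At this scale, construction can become commercially viable.
        return "build"
    # Below the threshold, building rarely pays off unless the workload
    # must stay on site (for example, edge computing).
    return "build" if strict_on_prem else "colocate"


print(recommend(0.5, strict_on_prem=False))
print(recommend(0.5, strict_on_prem=True))
```

A real decision would also weigh the cost factors discussed in the next section; the helper only encodes the scale and on-premises criteria.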

Cost Considerations for Building a Data Center

When evaluating your data center options and selecting the most suitable one for your company’s specific needs, there are several factors to consider:

- Physical Space Requirements: Consider the physical footprint you will require. Once you clearly understand this aspect, you can estimate the associated costs of your colocation data center. This is an excellent starting point if you are still determining your power requirements. Assess your existing racks and anticipate future needs. High-density loads, such as blade chassis and other devices, might place you in the “Heavily Loaded Cabinet” or “Moderately Loaded Cabinet” categories, which can impact costs.

- Network Requirements: The costs of different network providers can vary significantly. Pricing is contingent upon efficiency and individual business requirements. It is important to consider economies of scale when assessing network provider costs, as they can have a substantial impact. Heavy network users typically have access to more cost-effective options. For most businesses, a few hundred Mbps is sufficient and can be obtained at around the $250 per month price point, with room for expansion as the company grows.

- Service Levels: The level of service you opt for will inevitably influence pricing, so it is another aspect to consider. Many data centers offer a completely hands-off approach. While this may reduce costs, it also means a heavier workload for your team. If you prefer service staff support, with a fully managed service and options such as remote hands available, you will likely be looking at a slightly higher price point. However, the experience provided will be far superior, and your team will have more time to focus on other tasks.

- Cost Components of Colocation: Be aware of potential hidden costs associated with certain data centers. These costs may include cross-connect fees, additional packages like remote hands, and unanticipated network expenses. When combined, these factors can significantly increase the overall price of the data center. Consequently, fully managed data centers often prove more cost-effective in the long run.
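
The cost factors above can be combined into a simple monthly estimate. The sketch below is only an illustration of the arithmetic: the `ColoQuote` class and every price in it are hypothetical placeholders, except the $250 network figure, which comes from the text. Substitute real quotes before drawing any conclusion.

```python
from dataclasses import dataclass


@dataclass
class ColoQuote:
    """Hypothetical monthly colocation quote; all fields are illustrative."""
    cabinets: int
    price_per_cabinet: float          # monthly, placeholder value
    network: float = 250.0            # monthly, figure quoted in the text
    managed_service_fee: float = 0.0  # monthly, placeholder value
    cross_connects: int = 0
    cross_connect_fee: float = 0.0    # monthly per cross-connect, placeholder
    remote_hands_hours: float = 0.0
    remote_hands_rate: float = 0.0    # hourly, placeholder value

    def monthly_total(self) -> float:
        space = self.cabinets * self.price_per_cabinet
        # "Hidden" costs: cross-connect fees and remote-hands hours.
        hidden = (self.cross_connects * self.cross_connect_fee
                  + self.remote_hands_hours * self.remote_hands_rate)
        return space + self.network + self.managed_service_fee + hidden


hands_off = ColoQuote(cabinets=4, price_per_cabinet=800.0,
                      cross_connects=4, cross_connect_fee=100.0,
                      remote_hands_hours=10.0, remote_hands_rate=150.0)
managed = ColoQuote(cabinets=4, price_per_cabinet=1000.0,
                    managed_service_fee=500.0)

print(hands_off.monthly_total(), managed.monthly_total())
```

Listing the add-on fees as explicit fields makes it harder to overlook them when comparing a hands-off quote with a fully managed one.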

Steps, Considerations & Specifications

This section will delve into the various steps, considerations, and specifications crucial to the process.

Electrical Layout and Descriptions

When building a data center, prioritize performance and reliability. For the electrical layout, use dedicated and redundant FR3 fluid-filled transformers for primary utility entrances. If separate transformers aren’t possible, accommodate two feeds from the same “utility building source” as the primary load.

The central entry point to the data center should serve as the switchgear for each leg of the 2N infrastructure, even when both feeds come from the same “utility building source.” Incorporate an inbound TVSS (Transient Voltage Surge Suppression System) on the line side of the ATS (automatic transfer switch) for both utility and generator.

Note that utility diversity doesn’t affect tier ratings. Focus on achieving emergency backup generation redundancy at a controllable point of differentiation rather than relying on extensive utility systems. The key concept is to have two separate switchgear lineups and backup power generation systems for the 2N system, regardless of where the feeds come from.

Ideally, the switchgear should have a two-breaker pair, although an “ATS” with a transition switch may be acceptable. The TVSS system should have an indicator light. Each leg of the 2N infrastructure needs its own independent lineup of switchgear, distribution, and UPS.

In some cases, generators back up the entire building. If adequately designed, this may be counted as one generator source. Implement a UPS and distribution system below it to complete one leg of the 2N infrastructure. For the final design, provide two distinct power cords to each rack, with the second fed from an entirely separate switchgear, generator, and UPS lineup.

Although this second system may use the building source as its “utility source,” don’t count the same generator system twice. The redundant feed needs its own backup generator.

In smaller data centers, it’s possible to house redundant switchgear and critical systems in the same room. However, in larger setups, maintain physical separation and isolation. Ensure physically diverse and isolated pathways to and from gear, including routes to backup generation, avoiding single corridors. A minimum requirement for physical separation between redundant feeds is a 1-hour rated firewall.

Overall, prefer physical, electrical, and logical isolation between redundant components, avoiding practices like paralleling, N+1 common bus, or main-tie-main configurations.
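
One way to sanity-check a design against the 2N rules above is to list the components in each leg and look for anything that appears in both. The sketch below is a minimal illustration. The component names and the dictionary layout are hypothetical, and the only sharing it tolerates is the upstream utility source, as the text allows.

```python
def shared_components(leg_a: dict[str, str], leg_b: dict[str, str]) -> set[str]:
    """Return component IDs used by both legs, ignoring the utility source."""
    ids_a = {cid for role, cid in leg_a.items() if role != "utility"}
    ids_b = {cid for role, cid in leg_b.items() if role != "utility"}
    return ids_a & ids_b


# Hypothetical inventory: both legs draw from the same building utility source.
leg_a = {"utility": "building-main", "switchgear": "SWGR-A",
         "generator": "GEN-building", "ups": "UPS-A"}

# Counting the building generator twice violates 2N.
leg_b_bad = {"utility": "building-main", "switchgear": "SWGR-B",
             "generator": "GEN-building", "ups": "UPS-B"}

# A dedicated generator for the redundant leg resolves it.
leg_b_good = {"utility": "building-main", "switchgear": "SWGR-B",
              "generator": "GEN-B", "ups": "UPS-B"}

print(shared_components(leg_a, leg_b_bad))
print(shared_components(leg_a, leg_b_good))
```

An empty result means the two legs share nothing below the utility entrance. A check like this covers only electrical identity, not physical separation or pathway diversity.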

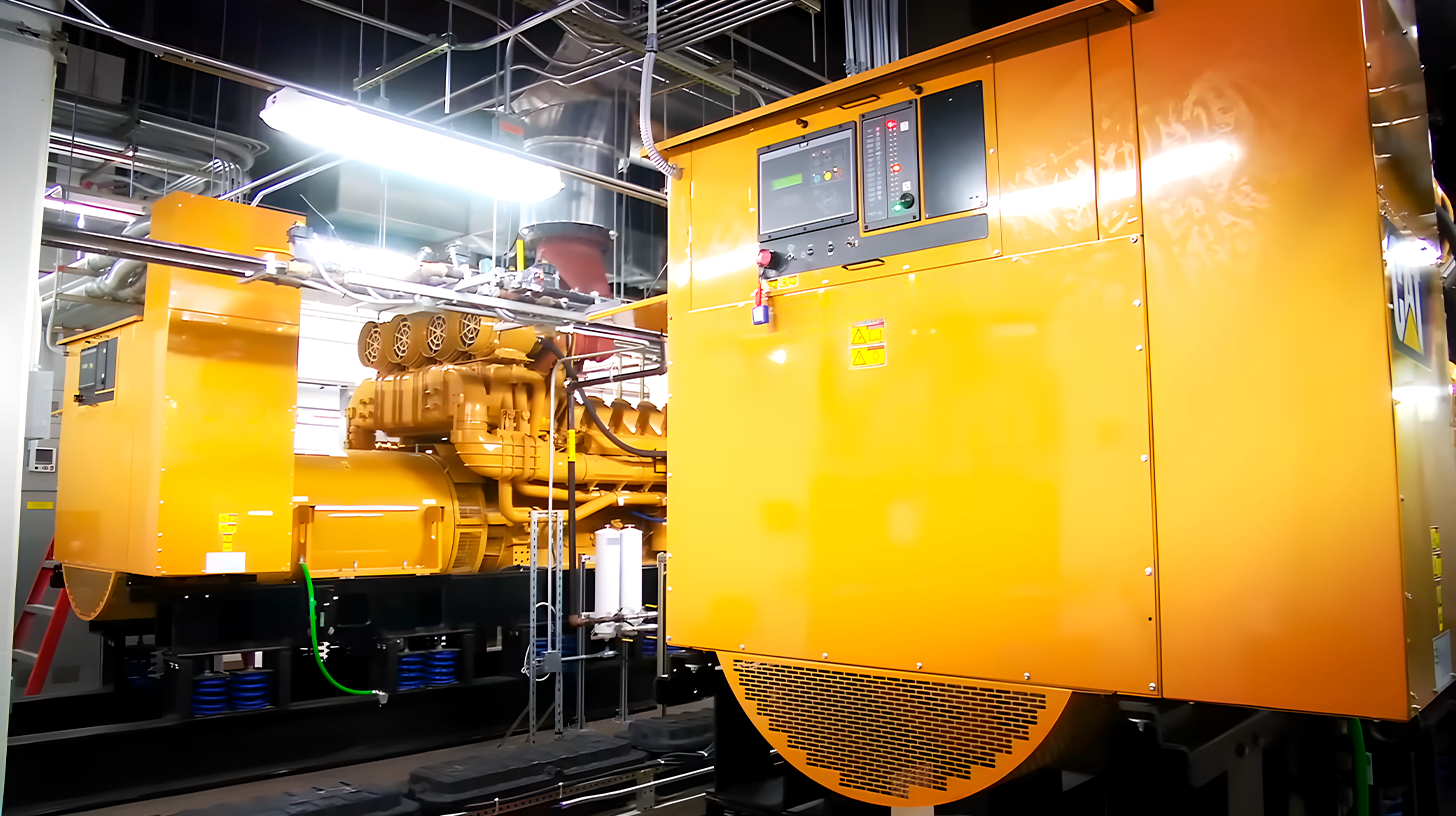

Backup Power and Generation

For a 2N system, two separate generators should independently supply power to each switchgear. One generator should be diesel, while the other should use natural gas. Diverse fuel sources reduce the worry of running out of fuel during a long outage (the generator equivalent of “range anxiety”) and simplify fuel delivery logistics. The natural gas generator should be capable of a “rich start” in 30 seconds or less. Physical isolation of the generators is preferred, either by placing them on separate sides of the building or with sufficient distance between them.

The diesel generator should have fuel on-site for four days (96 hours), while the natural gas generator should have a minimum of 12 hours of fuel reserve stored as propane. Both generators should be able to run indefinitely during any outage. It is recommended to stay within 70% of the prime rating of the generator under peak conditions. The generators should be ISO 8528-1 rated.
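
The 70% loading guideline and the 96-hour diesel reserve translate into two short calculations. The sketch below assumes a peak load of 350 kW and a full-load burn rate of 100 litres per hour. Both numbers are hypothetical placeholders; use the manufacturer's fuel consumption data for a real design.

```python
def min_prime_rating_kw(peak_load_kw: float, max_fraction: float = 0.70) -> float:
    """Smallest prime rating that keeps peak load within max_fraction."""
    return peak_load_kw / max_fraction


def diesel_reserve_litres(burn_rate_l_per_h: float, hours: float = 96.0) -> float:
    """On-site fuel needed to run for the given number of hours."""
    return burn_rate_l_per_h * hours


# Hypothetical inputs, for illustration only.
peak_load_kw = 350.0
burn_rate_l_per_h = 100.0

print(round(min_prime_rating_kw(peak_load_kw)))   # roughly 500 kW
print(diesel_reserve_litres(burn_rate_l_per_h))   # 9600.0 litres
```

The same reserve function can be reused for the 12-hour propane reserve on the natural gas generator by passing `hours=12.0` and the appropriate consumption rate.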

Switching and Distribution

Each leg of the 2N system should have its own power distribution lineup. Provide two of everything, down to two cords to each rack, with no intersections or common bus. Preferably, there should be two layers of ground fault protection, with the neutral carried at least to the second distribution level. Each main distribution should feed its own mechanical-only panel and UPS/data center distribution panel.

All inbound feeds from the exterior to the data center, including exterior utilities, mechanical load outside of the room, and feeds from the main building, should have a transient voltage surge suppression system in line with the main feed. The mechanical panel may also have a TVSS system in line.

UPS Systems (Uninterruptible Power Supplies)

Online double-conversion UPS systems are the preferred choice. For small data centers, in-rack UPS systems are acceptable. Each rack should have two online double-conversion systems (2N), each fed from a different upstream power source (2N). They should not be paralleled in any way. Dedicated stand-alone units should be in separate electrical rooms.

Like other distribution elements, the units should be loaded to at most 80% of their capacity under the worst-case failure scenario. There should be a minimum of two separate UPS systems, each fed by a different utility feed.
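
The 80% limit has a practical consequence in a 2N layout: if one UPS fails, the survivor carries the whole rack, so each unit normally runs at no more than about 40%. The sketch below works through that arithmetic. It assumes the rack load splits evenly across the two cords in normal operation, and the kW figures are hypothetical.

```python
def ups_load_fractions(rack_load_kw: float, ups_capacity_kw: float) -> tuple[float, float]:
    """Return (normal, worst_case) load fractions for one UPS in a 2N pair."""
    normal = (rack_load_kw / 2) / ups_capacity_kw   # assumed even split
    worst_case = rack_load_kw / ups_capacity_kw     # the other UPS has failed
    return normal, worst_case


def within_limit(rack_load_kw: float, ups_capacity_kw: float, limit: float = 0.80) -> bool:
    """True if the surviving UPS stays at or below the limit."""
    _, worst_case = ups_load_fractions(rack_load_kw, ups_capacity_kw)
    return worst_case <= limit


# Hypothetical rack: 4 kW of IT load on two 5 kW UPS units.
print(ups_load_fractions(4.0, 5.0))   # (0.4, 0.8)
print(within_limit(4.0, 5.0))         # True
print(within_limit(4.5, 5.0))         # False
```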

Battery cabinets can be problematic, especially in small data centers. Invest in individual cabinets with the right size UPS units in a 2N configuration. With two rack mount UPS units per cabinet fed from different 2N feeds, losing a UPS or battery set is less “painful” under the 2N and distributed architecture.

When one fails, replacing it with a cold spare is easier. Batteries are the least reliable component of any data center. These rackmount UPS systems are more user-friendly for IT staff than large centralized systems that may be technically challenging and prone to faults.

Cooling Units Installation and Configuration

To ensure a reliable facility, the installation and configuration of cooling units are crucial. DX (Direct Expansion) systems with individual condenser feeds are recommended for reliability. These systems route heat rejection to an exterior location through separate paths. For retrofit scenarios with legacy chillers, dual-coil units are advisable. This approach allows considering the chiller as a utility that may eventually be phased out without compromising system reliability.

While chillers provide cooling, they should be viewed as utilities in the context of a reference architecture. Relying on chillers as shared resources with the building, especially in corporate data centers, can lead to issues. Building maintenance teams may not fully understand the unique requirements of the data center environment, resulting in unexpected chiller shutdowns for maintenance and disruptions.

In contrast, DX units offer a streamlined and efficient solution. DX units require fewer valves than chillers, and minimizing valve count is the same principle recommended in the design of nuclear plants and submarines. The complexity and cost of maintaining chillers and their intricate designs make them less desirable for data centers. DX systems with electronically commutated (EC) fans have become increasingly popular due to their lower operational expenditure (OPEX), simpler maintenance, and peace of mind.

All mechanical systems should be elevated and gravity-fed to interior or exterior drainage for the condensate pan. Additionally, each system should have an external leak detection sensor to detect and address water leaks promptly. In cases where a condensate pan needs to be pumped, redundant condensate pumps and a dual float-off switch are crucial. Furthermore, an elevated floor dam with leak sensors should be built around the system.

Continuous Cooling

In addition to installation and configuration, maintaining continuous cooling is crucial for optimal facility functioning. The cooling system should respond swiftly to failures, ensuring no more than a 5-degree Celsius change within fifteen minutes. Achieving continuous cooling is straightforward for lightly loaded rooms.

However, in areas with high-density heat loads, the dynamics of heat distribution can change rapidly, even with transient failures. This is observed when companies outgrow their IT closets or when high-density applications are deployed on a larger scale.

Several measures help. Starting generators promptly, feeding HVAC control systems from UPS (Uninterruptible Power Supply) systems, and providing continuous supplemental power to HVAC systems through flywheel technology all contribute to meeting the criterion of a maximum 5-degree Celsius change within any fifteen-minute period.

Moreover, the equipment should operate within the guidelines defined by the ASHRAE Class A1 standard for humidity and temperature, ensuring compatibility with IT equipment.
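
The continuous-cooling criterion (no more than a 5-degree Celsius change within any fifteen minutes) can be checked against logged sensor data. The sketch below is one possible approach, assuming samples arrive as `(minutes, temperature_c)` pairs sorted by time. The function name and the sample readings are hypothetical.

```python
def max_change_in_window(samples: list[tuple[float, float]],
                         window_min: float = 15.0) -> float:
    """Largest absolute temperature change between any two samples
    taken no more than window_min minutes apart."""
    worst = 0.0
    for i, (t0, c0) in enumerate(samples):
        for t1, c1 in samples[i + 1:]:
            if t1 - t0 > window_min:
                break  # samples are sorted, so later ones are out of range too
            worst = max(worst, abs(c1 - c0))
    return worst


# Hypothetical readings during a transient cooling failure.
readings = [(0, 22.0), (5, 23.5), (10, 25.0), (15, 26.0), (20, 26.5)]

change = max_change_in_window(readings)
print(change, "OK" if change <= 5.0 else "exceeds limit")
```

The pairwise comparison is quadratic in the number of samples within a window, which is acceptable for sensor logs sampled every few minutes.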

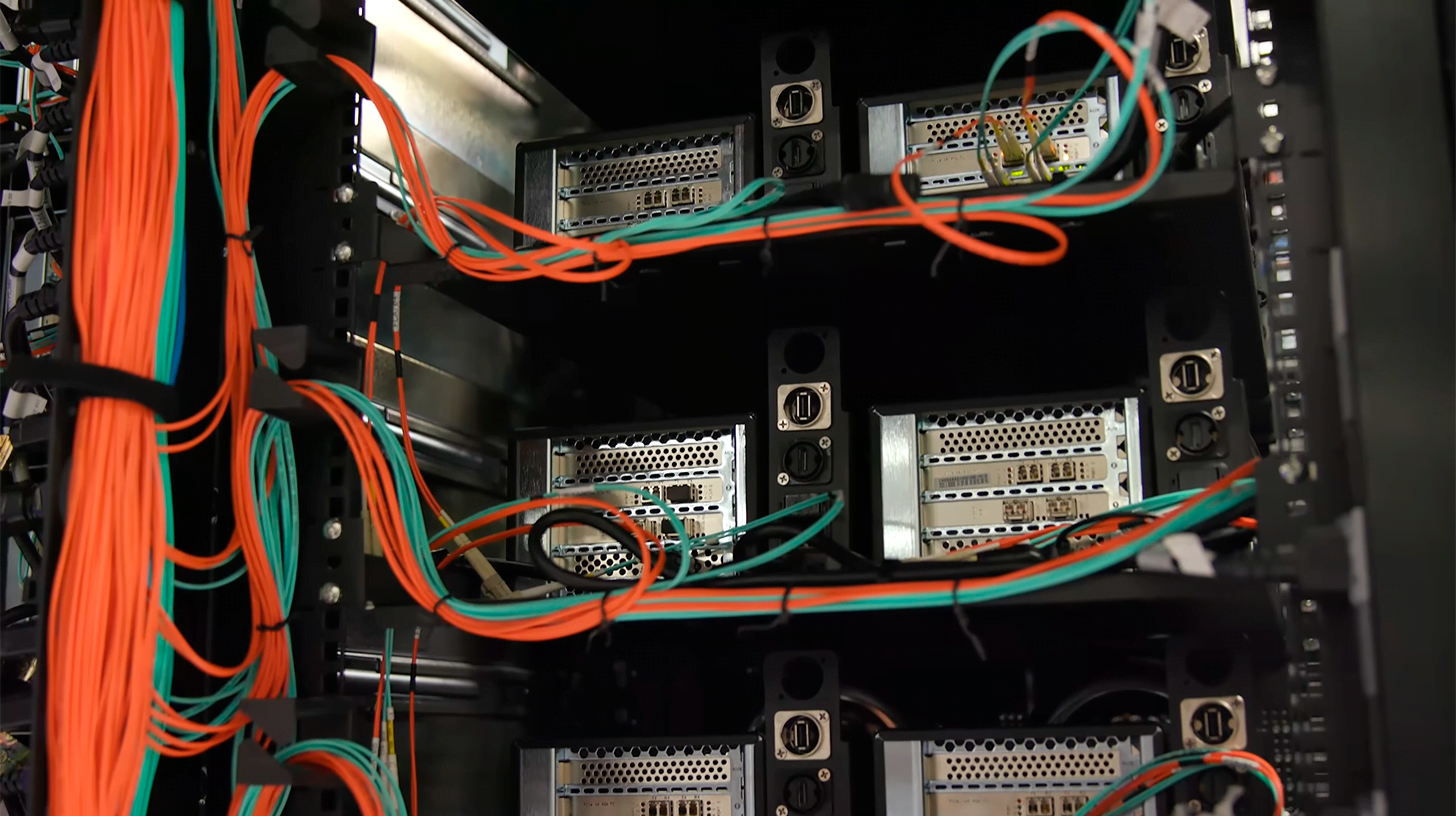

Network

For reliable connectivity, it’s essential to have redundant carriers across multiple entry points and paths. Avoid relying on a single entry point or common conduit. In small data centers, terminate the network into separate racks. In contrast, larger data centers should have two distinct Meet Me Rooms (MMRs).

To enhance resilience, ensure the network path to and from the main PoP (point of presence) is redundant. Ideally, one feed should be aerial and the other buried, even though fiber paths can overlap. This diversity helps mitigate faults caused by construction or adverse weather conditions. Stay tuned for our upcoming article on in-rack reliability and discussions for small to medium-sized businesses.

Fire Suppression

Consider implementing dual-interlock pre-action or gas-based fire suppression systems, but exercise caution in managing the environment, especially when using gas and VESDA (Very Early Smoke Detection Apparatus). These systems can produce false alarms and raise life safety issues.

Building & Environmental Monitoring System

Ensure the building management system monitors all active capacity components such as switchgear, generators, UPS, and mechanical systems. Environmental sensors throughout the facility provide insights into ambient temperature variations. The building management system should have a daily heartbeat that sends email notifications to responsible parties.

Carefully consider which alarms are critical and which are not. Set up the system accordingly and establish email distribution with your preferred vendor. For non-emergency notifications, use email forwarding, and consider pager notifications for situations that warrant being alerted at 3 AM. Be mindful of alarm fatigue and thoughtfully configure your system for the right balance.
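
The alarm policy described above (email for routine notifications, paging only for events worth a 3 AM wake-up, plus a daily heartbeat) can be sketched as a small routing table. Everything below is illustrative: the severity levels, channel names, and one-hour grace period are assumptions, not features of any particular building management system.

```python
from datetime import datetime, timedelta
from enum import Enum


class Severity(Enum):
    INFO = 1
    WARNING = 2
    CRITICAL = 3


def route(severity: Severity) -> list[str]:
    """Pick notification channels; only critical alarms page someone."""
    if severity is Severity.CRITICAL:
        return ["pager", "email"]
    return ["email"]


def heartbeat_missed(last_seen: datetime, now: datetime,
                     interval: timedelta = timedelta(days=1),
                     grace: timedelta = timedelta(hours=1)) -> bool:
    """True if the daily heartbeat is overdue by more than the grace period."""
    return now - last_seen > interval + grace


print(route(Severity.WARNING))    # ['email']
print(route(Severity.CRITICAL))   # ['pager', 'email']

now = datetime(2024, 1, 2, 12, 0)
print(heartbeat_missed(datetime(2024, 1, 1, 11, 30), now))  # False
print(heartbeat_missed(datetime(2024, 1, 1, 9, 0), now))    # True
```

Keeping the paging rule to a single severity level is one simple way to limit alarm fatigue; a missing heartbeat is itself worth treating as an alarm, since it suggests the monitoring system has stopped reporting.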

Security Systems

Although security systems are considered ancillary from a site reliability engineering standpoint, they are nonetheless crucial. Each system must be secure enough to meet the organization’s requirements. This includes safeguards against unauthorized access, data breaches, and other security threats.

Lightning Protection

Lightning protection is essential for building infrastructure, especially for data centers. Install a full set of air terminals to provide comprehensive protection, covering all exterior equipment. Additionally, implement transient voltage surge suppression for inbound feeds from exterior utilities, including the rest of the building, to safeguard the controlled data center environment.

Leak Protection

To maintain the data center’s integrity:

- Ensure sufficient roofing is in place to prevent leaks.

- Remember that rooftop-mounted equipment and penetrations account for approximately 70% of leaks, particularly during storms.

- Address this vulnerability proactively to minimize or prevent potential damages and disruptions caused by leaks.

Containment and Air Handling

Effective containment and appropriate air handling are critical for a data center’s optimal performance and reliability. Hot aisle containment is preferred as it enhances room comfort and enables pre-cooling for redundancy during transient failures.

With enough clearance space, a partial containment approach with a drop ceiling and ported return grates can be used. In such instances, HVAC/CRAC units can be ducted to draw out hot air efficiently. If this configuration isn’t feasible, a thermally secure containment system with common ducting back to the HVAC systems is necessary.

Another alternative for areas with limited clearance is in-row cooling systems utilizing direct expansion (DX) technology. Coordination between containment and fire protection measures is crucial to ensure compliance with fire safety regulations.

Single Corded Legacy Load

A point-of-use static transfer switch (STS) is necessary at the rack level for any single-corded legacy load. The STS, which acts as a fast automatic transfer switch (ATS), should provide near-instantaneous transfer between redundant power sources. The STS must accept two unsynchronized sources to ensure an uninterrupted power supply and minimize the risk of downtime.

By adhering to these procedures, you can create a well-constructed data center. Remember that operational sustainability depends on a robust maintenance and operations program. In an upcoming post, we will discuss essential aspects of data center maintenance to ensure the smooth functioning of this critical infrastructure.

Achieving fault tolerance can be complex and costly. In such situations, opting for colocation can be wise, allowing the IT team to focus on higher-end aspects of the technology stack. The physical infrastructure of a data center requires a distinct discipline beyond the scope of the IT team.